Are We Over-Emphasising The Technical Overhead In Blockchain Networks?

This post reflects my personal opinions.

For those of you who are still here, thanks for staying around. I aim to start posting more again going forward, so stay tuned!

Blockchain networks are coordination machines, enabling participants from around the world to collaborate under a commonly agreed set of rules without needing a trusted third party to facilitate it.

This offers a fundamentally different trust model from the current status quo - enabling us to move from pure reputation-based systems to ones backed by crypto-economic or cryptographic guarantees.

The technology offers a lot to be excited about - particularly around reducing intermediaries and enabling efficient resource allocation on a global scale. However, an often-heard criticism of blockchain networks is the additional cost and compute overhead of the decentralized model.

Does the inefficiency of blockchain networks matter?

TL;DR: Yes, but not as much as it might seem - it’s only one part of the larger picture.

Where Does The Inefficiency Stem From?

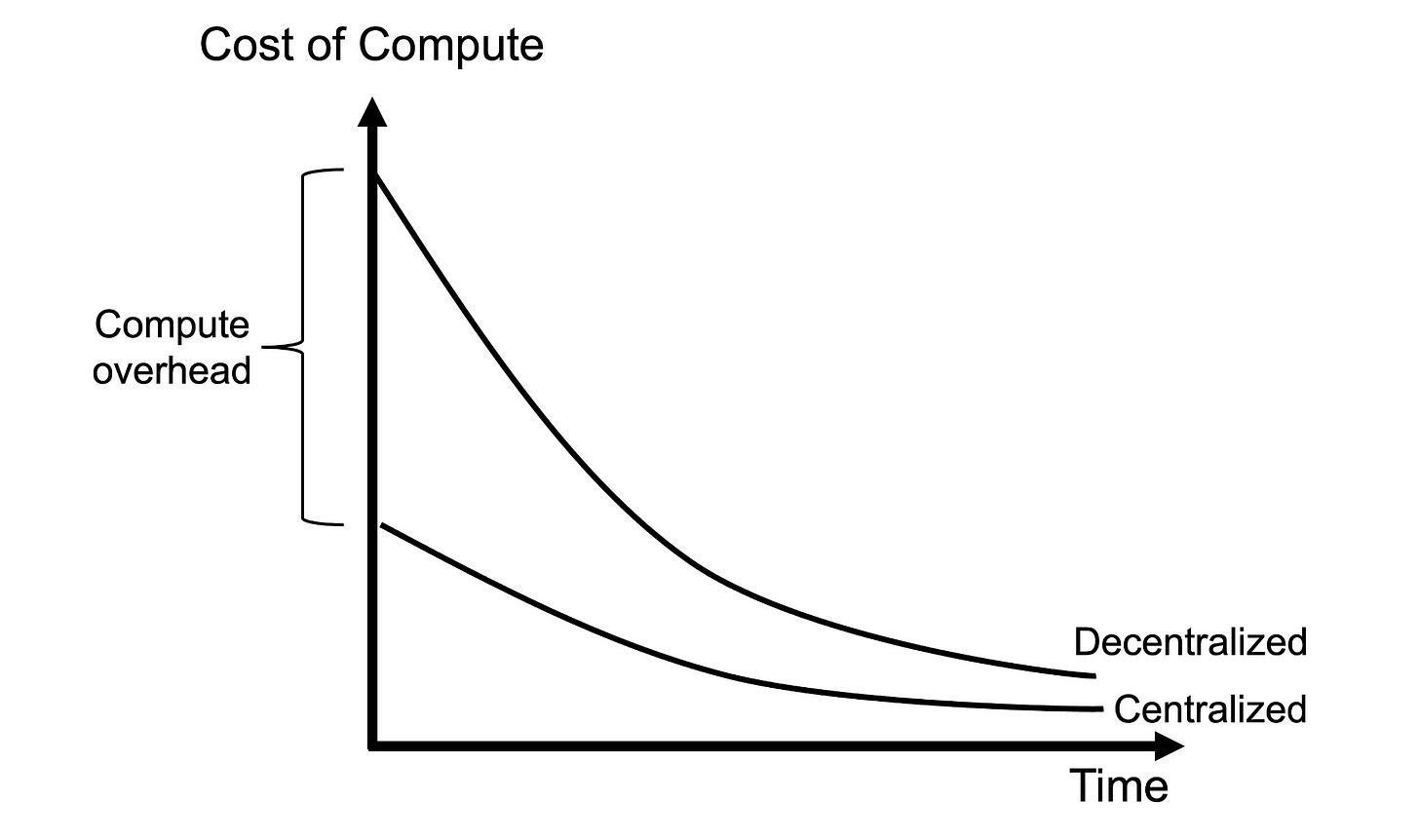

If we compare purely on computational efficiency, blockchain networks (and distributed systems at large) introduce a lot of overhead compared to the centralized model. This overhead comes from additional functions such as P2P messaging, consensus and replication. Having 10,000 machines around the world re-execute the same computation and communicate with each other is inefficient.

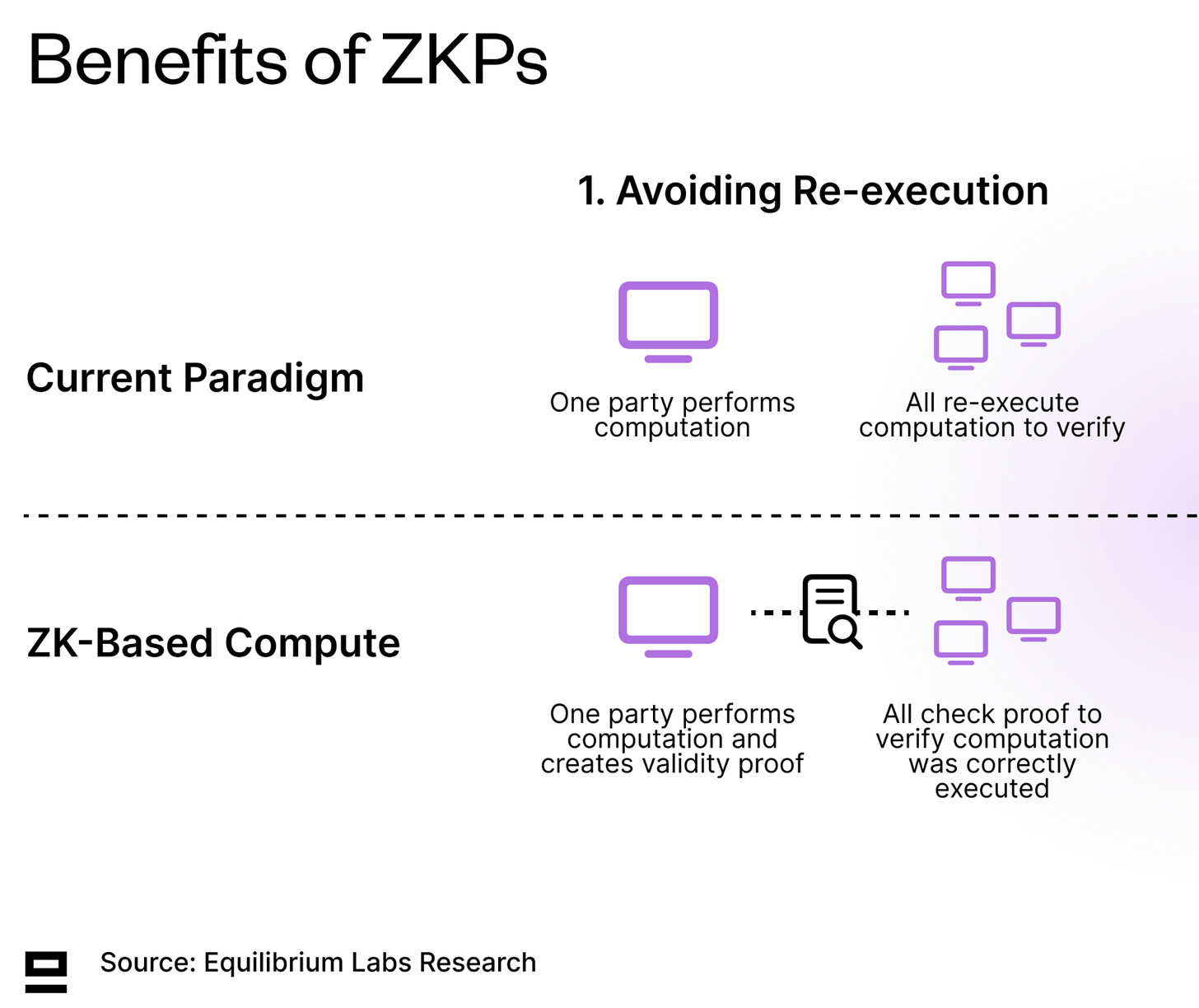

Innovation and technical progress can reduce this overhead. One example is zero-knowledge proofs (ZKPs), which let us avoid re-execution and move from a model of “all compute to verify” to “only one computes, all verify” (N/N → 1/N model). This is a paradigm shift for verifiable compute.

While generating ZK proofs is still relatively expensive compared to simply executing the computation, the cost is declining rapidly. However, even if the additional cost of proving were to approach zero over time (a big if), there would still be overhead from areas other than execution, such as P2P communication, consensus, proof verification and some redundancy.
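A toy cost model helps make the N/N vs 1/N comparison concrete. The sketch below uses unit-less costs, and the parameter names (`exec_cost`, `prover_overhead`, `verify_cost`, `network_cost`) are hypothetical. It also assumes that costs simply add up linearly per node. Real proving and verification costs vary widely between proof systems and workloads, so treat the numbers as illustrative only.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Hypothetical, unit-less cost model; every parameter is an illustrative assumption."""

    nodes: int              # number of machines in the network (N)
    exec_cost: float        # cost of executing the computation once
    prover_overhead: float  # extra proving cost, as a multiple of exec_cost
    verify_cost: float      # cost for one node to verify a proof
    network_cost: float     # per-node cost of P2P messaging and consensus

    def centralized(self) -> float:
        # A single trusted operator executes once: no replication, no consensus.
        return self.exec_cost

    def re_execution(self) -> float:
        # "All compute to verify" (N/N): every node re-executes and communicates.
        return self.nodes * (self.exec_cost + self.network_cost)

    def prove_once(self) -> float:
        # "Only one computes, all verify" (1/N): one prover pays execution plus
        # proving overhead; every node still verifies and communicates.
        proving = self.exec_cost * (1 + self.prover_overhead)
        return proving + self.nodes * (self.verify_cost + self.network_cost)


m = CostModel(nodes=1_000, exec_cost=1.0, prover_overhead=99.0,
              verify_cost=0.125, network_cost=0.125)
print(m.centralized(), m.re_execution(), m.prove_once())  # 1.0 1125.0 350.0
```

Now set `prover_overhead=0.0` and keep the other figures. In this model `prove_once()` still comes out at 251.0, against 1.0 for `centralized()`. The gap comes from the per-node verification and networking terms, which remain even when proving is free.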

In other words, we can never fully reach the centralized benchmark in terms of hardware utilisation and cost of compute.

This isn’t as concerning as it might seem though… Let me explain.

Why Doesn’t Computational Overhead Matter As Much?

The compute overhead is an important metric for infrastructure developers in determining how far from the centralized benchmark we are (we still have a long way to go). Over time, we should aim to get asymptotically closer by improving the ratio through R&D and new technological leaps (ZKPs being one good example of this).

However, focusing only on compute costs misses the broader point, as compute is often just one part of an application’s costs, sometimes a very small one. Instead, we should compare the total cost of an application built on a blockchain network with that of one built in a centralized manner.

More specifically, we should ask what benefits the decentralized option can enable and how it can improve the current status quo. The flip side of the higher compute cost of building on top of blockchain networks is a set of benefits such as higher redundancy and fault tolerance, fewer intermediaries, effective resource allocation on a global scale and potentially easier coordination.

What Does Total Cost Include, i.e. What Should We Consider?

Besides compute costs, the cost of a product or service to end consumers reflects the direct costs of producing it (e.g. capital costs, both human and financial), the pricing power of existing businesses (a monopolistic/duopolistic market structure vs a highly competitive market), the number of rent-seeking intermediaries in the value chain, and more.

Any attempt to simplify or streamline some of these areas can lead to a net improvement if the sum of the benefits exceeds the increase in compute costs. Another angle is adding features or guarantees that consumers are willing to pay more for (provided their willingness to pay exceeds any additional costs).
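The break-even condition above can be written as a one-line check. The sketch below is hypothetical. The function name, the savings categories and the figures are made up for illustration. It also assumes every amount is expressed per unit of product in the same currency.

```python
from typing import Mapping


def net_improvement(compute_cost_increase: float,
                    other_savings: Mapping[str, float],
                    extra_willingness_to_pay: float = 0.0) -> float:
    """Net benefit of the decentralized option per unit of product or service.

    A positive result means the benefits outweigh the higher compute cost.
    All inputs are hypothetical amounts in the same currency and unit.
    """
    # Savings outside compute, e.g. fewer intermediary fees or less pricing power.
    total_savings = sum(other_savings.values())
    # Extra value users place on new features or guarantees also counts as a benefit.
    return total_savings + extra_willingness_to_pay - compute_cost_increase


print(net_improvement(0.5, {"intermediary_fees": 1.5, "reconciliation": 0.25}))  # 1.25
```

The hard part in practice is estimating the inputs, not the arithmetic. Some savings, like intermediary fees, are easy to measure. Others, like user preferences, are not.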

Today, there are other frictions in developing blockchain-based applications, such as user-facing issues (UX/UI) and less support for product development, which make it an uphill battle.

Despite these, we are already seeing that blockchain-based applications can be competitive and challenge existing applications in several industries.

So what are some examples that demonstrate the additional benefits or help compare the total costs of the two solutions? Let’s take a look:

Examples Of Blockchain-Based Applications

Faster Settlement: Current blockchains can settle in the span of seconds to minutes. Compare this to the traditional financial system, where settling trades and transfers typically takes anywhere from hours to several business days. As an example, what are the cost savings a merchant gets from (almost) instant settlement of payments? At the very least, the opportunity cost of capital, i.e. a few days’ worth of interest. For businesses with large turnover, this can be a significant cost saving (particularly relevant for low-margin businesses).
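Here is a rough way to size that opportunity cost. It is a minimal sketch under several simplifying assumptions:

- turnover arrives evenly over the year;
- settlement time is counted in calendar days rather than business days;
- interest is simple.

The merchant figures are hypothetical.

```python
def annual_float_cost(annual_turnover: float, annual_rate: float,
                      settlement_days: float, days_per_year: int = 365) -> float:
    """Interest forgone per year on payments that are waiting to settle.

    Assumes turnover arrives evenly across the year, settlement time is measured
    in calendar days, and interest is simple (non-compounding).
    """
    # Average balance tied up in unsettled payments at any given moment.
    avg_in_transit = annual_turnover * settlement_days / days_per_year
    # Interest that balance could have earned over a year.
    return avg_in_transit * annual_rate


# Hypothetical merchant: 36.5M in yearly payments, 5% rate, 2-day settlement.
slow = annual_float_cost(36_500_000, 0.05, 2)
fast = annual_float_cost(36_500_000, 0.05, 0)  # treats seconds-to-minutes as ~0 days
print(round(slow - fast))  # 10000
```

With these assumed figures, the saving works out to roughly 0.03% of turnover (0.05 × 2 / 365). That is small in absolute terms, but it can matter next to thin margins.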

Fewer Intermediaries: With fewer rent-seeking parties trying to get a share of the pie, the total cost to the end user should decrease. However, this only works if markets are competitive enough with low barriers to entry for newcomers (see below). Fewer intermediaries also lead to less required coordination between the different parties, which can bring further efficiency gains and minimize the room for error.

Lowering Barriers To Entry: There are many examples of industries dominated by one or a few big companies, such as the app store duopoly, social media networks (user lock-in and strong network effects) or large-scale physical networks (e.g. telecoms). The high barriers to entry can be due to factors such as high capital requirements, regulatory requirements, network effects, etc. One example of how blockchain-based applications can compete in these industries is by turning the capital structure on its head. Rather than raising large amounts of capital upfront, they can bootstrap a network with the help of early token incentives. Examples include Helium (telecom) and Hivemapper (mapping).

Offering Additional Features or Guarantees That Users Care About: Either doing an existing task better or providing a new feature. One example is NFT-based ticketing (e.g. OPEN Ticketing), which has the potential to make ticketing fairer by ensuring fans can buy tickets without being front-run by scalping bots that later resell them on secondary markets. NFT tickets also offer new ways to prolong and deepen the relationship between artists and fans, through things like collectibles and post-event drops.

Tapping Into Unused Resources: Crypto is global and permissionless, which means it’s quite efficient at finding the cheapest possible resource globally, whether that’s compute, bandwidth, energy, etc. It also enables tapping into dormant resources, such as allowing individuals to rent out their CPU/GPU through a decentralized compute network whenever they aren’t using it. This helps reduce total costs by cutting intermediaries and through the low marginal cost at which users are willing to rent out their hardware. On the flip side, it might also lead to additional networking costs, complexity, and friction for both the supply and demand sides when joining the network. Generally, the supply side is much easier to build out than the demand side, but both need to match in the long run for the network to be sustainable.

Permissionless Integration: With data stored on a shared ledger, it is possible to create integrations and give rewards to certain communities or groups of people without having to get permission from the other organisation. This is possible to do with the current siloed systems as well but requires significant effort on the business development side and coordination between the organisations. What is the cost of all those employees working on the integration, legal fees and other friction that comes with this? Quite high, I would assume.

Summary

The compute overhead of blockchain networks (or any decentralized network) should not be considered in isolation, as compute costs are only a part of the total cost of a solution. Any attempt that tries to increase efficiency and reduce the total cost of a service or product should be considered, and as we’ve seen - building on blockchain networks can enable that.

Some of these costs are easy to quantify, whereas others are harder to pin down and depend on user preferences. It is even possible that users will accept paying a premium for verifiable compute in certain use cases, especially in low-trust environments.

That said, we should try our best as an industry to quantify and communicate the total cost savings and other benefits that blockchain-based solutions can bring. In addition, by shifting some attention away from the compute overhead and development of infrastructure, we can (hopefully) build more applications that end users care about.

While there is still a lot of work to be done on the infra side to increase scale, reduce costs and increase privacy, we shouldn’t forget that the end goal is to build great applications for end users. Infrastructure is not the product, it’s a means to an end.